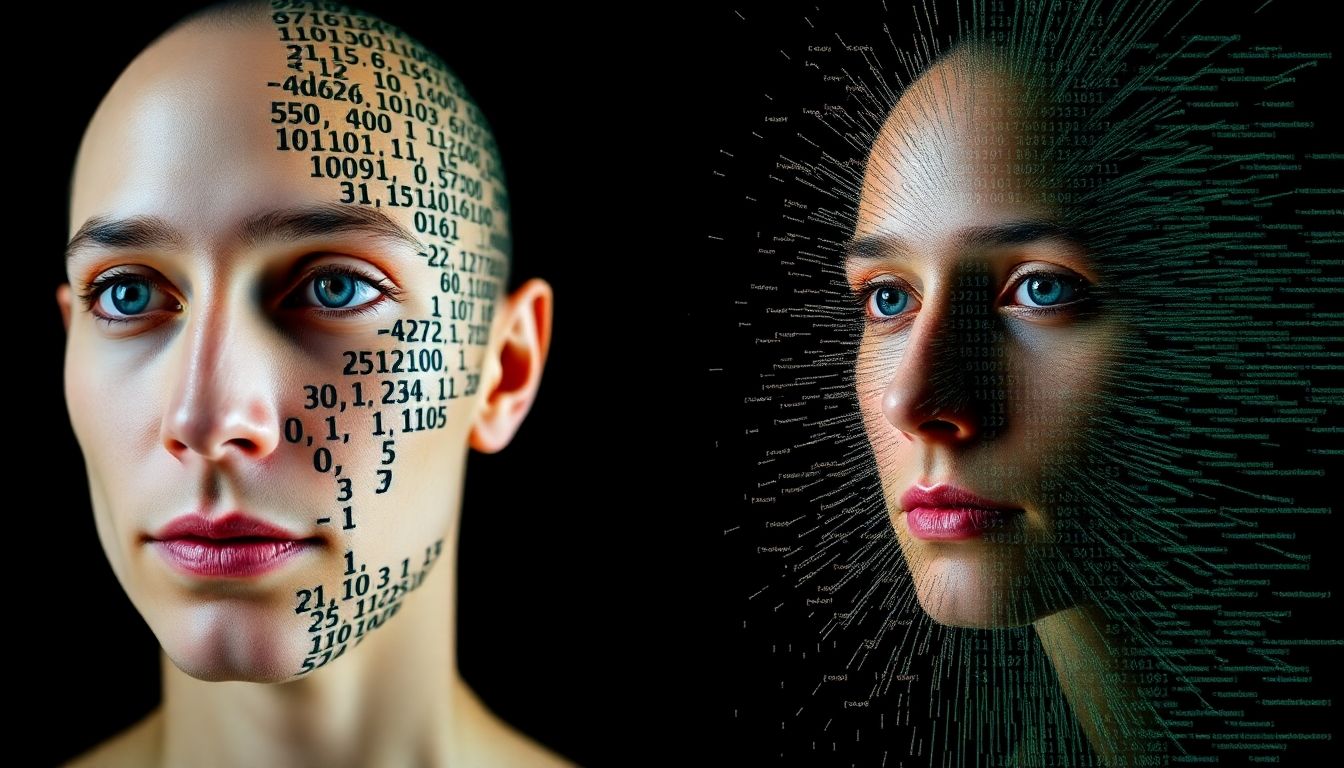

Recent events have highlighted the escalating threat posed by sophisticated deepfake technology. AI tools are now capable of producing highly realistic fake news broadcasts and impersonations. As these tools improve in quality and become more accessible, they raise significant concerns for democratic processes, election integrity, and public trust in media.

Key Takeaways

AI-generated deepfakes are becoming increasingly sophisticated and difficult to detect.

The technology poses a significant threat to democratic processes, particularly during elections.

Misuse of deepfakes extends beyond politics to non-consensual pornography and fraud.

Regulators and tech companies are racing to develop countermeasures, but the technology is evolving rapidly.

Public awareness and critical evaluation of online content are crucial.

The Escalating Threat of Deepfakes

Ireland's recent presidential campaign was marred by the circulation of a high-quality deepfake video depicting candidate Catherine Connolly announcing her withdrawal. The AI-generated clip was embedded in a realistic-looking fake RTÉ News broadcast, and its quality shocked even AI experts. Researchers noted the sophisticated blend of genuine and AI-generated footage, accurate graphics, and correct pronunciation of names. Together, these suggest a level of craft, and potentially local knowledge, not seen in previous deepfakes.

This incident underscores a growing concern: the threat posed by deepfakes has increased significantly. Experts such as Aidan O'Brien of the European Digital Media Observatory say that such manipulated media is of markedly higher quality than earlier examples, raising alarms about its potential to disrupt democratic processes.

Deepfakes in Global Elections and Politics

The problem is not confined to Ireland. In the New York City mayoral race, a campaign briefly posted a racist AI-generated video attacking an opponent. In the US state of New Hampshire, voters received AI-generated audio impersonating Joe Biden that discouraged them from voting in a primary election. Similarly, fake audio clips circulated before Slovakia's general election and may have influenced the outcome.

Isabel Linzer of the Center for Democracy & Technology warns that "we’ve only seen the tip of the iceberg" regarding AI's impact on elections. She stresses that the technology is improving and that bad actors are growing more comfortable using it, and she urges companies and governments to prioritize election integrity year-round, not just during campaigns.

Regulatory Responses and Challenges

In Ireland, the Electoral Commission and the media regulator responded swiftly to the deepfake, escalating the matter to social media platforms and reminding them of their obligations under the EU Digital Services Act. Meta removed the video within hours, but by then it had already been widely shared. X (formerly Twitter) opted to label posts sharing it as "manipulated media."

Globally, governments are grappling with how to regulate AI. Legislation such as the EU AI Act aims to address these challenges, but the technology is evolving faster than regulators can keep pace. The Catherine Connolly deepfake, for instance, suggests that guardrails on mainstream AI models were breached, and that systems capable of creating deepfakes of known individuals without their consent are available.

The Dual Nature of Deepfake Technology

Deepfake technology also has legitimate uses in areas such as entertainment, video game audio, and customer support. However, its potential for misuse is significant, including blackmail, reputational harm, fraud, and political manipulation. Because deepfakes can spread false information that appears to come from trusted sources, they erode trust in genuine media and can lead to severe real-world consequences.

The Ongoing Arms Race: Detection and Defence

Researchers are engaged in a continuous "arms race" to develop methods for detecting deepfakes. Techniques range from analyzing subtle physical inconsistencies, such as mismatched light reflections in the eyes, to AI-based algorithms that examine patterns in movement and speech. Detection has advanced, but the technology for generating deepfakes is improving rapidly too, making it increasingly difficult to distinguish real content from fake.

Ultimately, combating the threat of deepfakes requires a multi-faceted approach. This includes technological solutions for detection and prevention, robust regulatory frameworks, and, crucially, enhanced public awareness and media literacy to encourage critical evaluation of online content.